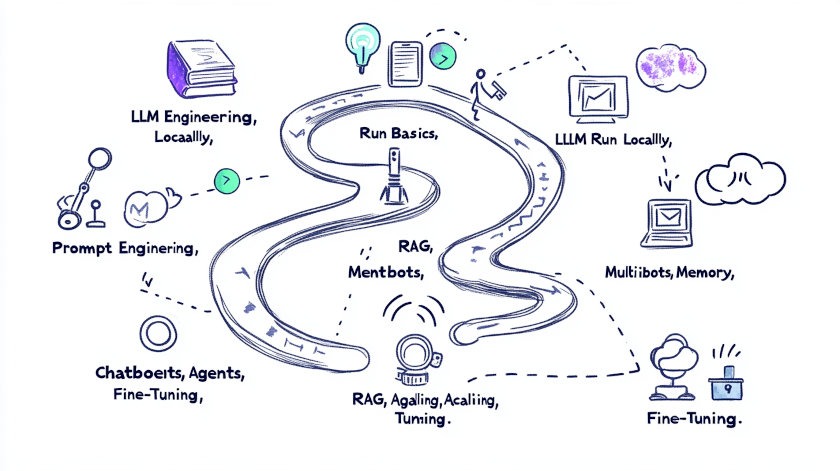

LLM Learning Roadmap for Software Developers

Stage-by-stage roadmap to mastering LLM technology, from foundational concepts to advanced, production-ready solutions

Large Language Models (LLMs) are transforming software engineering, powering intelligent chatbots, retrieval-augmented generation (RAG) systems, and autonomous agents. Developers who want to harness these capabilities with tools such as Spring AI (a Java application framework), PGVector (a vector-search extension for PostgreSQL), and Ollama (a runtime for local models) benefit from a structured learning path. This article lays out ten stages, each building on the previous one, followed by recommended resources and practice projects.

1. Grasping LLM Fundamentals

Understanding the Architecture

Begin by exploring the theoretical underpinnings of LLMs:

- Learn the differences between the main transformer variants: encoder-only, decoder-only, and encoder-decoder (sequence-to-sequence) models.

- Study tokenization, the process of splitting text into tokens and mapping them to the numerical IDs a model actually processes.

- Dive into attention mechanisms, which let each token draw on the other tokens in its context when building its representation.

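- Understand the two phases of inference (prefill, which processes the whole prompt at once, and decode, which generates output one token at a time) and common decoding strategies such as greedy decoding, temperature scaling, and top-k or top-p sampling.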
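During the decode phase, the model produces a raw score (logit) for every token in its vocabulary, and a decoding strategy turns those scores into a choice. The Java sketch below shows softmax with temperature, greedy selection, and top-k sampling on made-up logits; real inference engines apply the same kind of logic to vocabularies of many thousands of tokens, usually in optimized native code.

```java
import java.util.Arrays;
import java.util.Random;

/** Toy decoding strategies applied to one decode step's logits (illustrative values only). */
final class Decoding {

    /** Converts logits to probabilities; a lower temperature sharpens the distribution. */
    static double[] softmax(double[] logits, double temperature) {
        if (temperature <= 0) throw new IllegalArgumentException("temperature must be > 0");
        double max = Arrays.stream(logits).max().orElseThrow();
        double[] probs = new double[logits.length];
        double sum = 0;
        for (int i = 0; i < logits.length; i++) {
            // Subtract the max before exponentiating for numerical stability.
            probs[i] = Math.exp((logits[i] - max) / temperature);
            sum += probs[i];
        }
        for (int i = 0; i < probs.length; i++) probs[i] /= sum;
        return probs;
    }

    /** Greedy decoding: always pick the highest-scoring token. */
    static int greedy(double[] logits) {
        int best = 0;
        for (int i = 1; i < logits.length; i++) if (logits[i] > logits[best]) best = i;
        return best;
    }

    /** Top-k sampling: keep the k best tokens, renormalize their probabilities, sample one. */
    static int topK(double[] logits, int k, double temperature, Random rng) {
        Integer[] order = new Integer[logits.length];
        for (int i = 0; i < order.length; i++) order[i] = i;
        Arrays.sort(order, (a, b) -> Double.compare(logits[b], logits[a])); // best first
        int keep = Math.min(k, logits.length);
        double[] kept = new double[keep];
        for (int i = 0; i < keep; i++) kept[i] = logits[order[i]];
        double[] probs = softmax(kept, temperature);
        double r = rng.nextDouble(), cumulative = 0;
        for (int i = 0; i < keep; i++) {
            cumulative += probs[i];
            if (r < cumulative) return order[i];
        }
        return order[keep - 1]; // guard against floating-point rounding
    }
}
```

With k = 1, top-k sampling collapses to greedy decoding; raising the temperature flattens the distribution and makes less likely tokens appear more often.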

Practical Exploration

- Use visualization tools to observe LLM internals.

- Enroll in foundational LLM courses, such as those offered by Hugging Face.

- ✅ Experiment with a hosted model through the OpenAI API.

2. Running LLMs Locally with Ollama

Installation and Setup

- Download and install Ollama, a platform for running LLMs locally.

- Get comfortable with the basic commands, such as ollama pull, ollama run, and ollama list.

Model Deployment and Optimization

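- Launch your first model (e.g., Qwen 3 8B) and check that your RAM or GPU memory can accommodate it.

- Experiment with quantized model variants (for example, 4-bit or 8-bit builds distributed as GGUF files) to trade a small amount of quality for speed and lower memory use.

- Adjust runtime parameters such as context length (num_ctx) and temperature to balance quality and performance.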
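A quick way to reason about hardware requirements and quantization is to estimate the memory taken by the weights alone: parameters × bits per weight ÷ 8 bytes. The sketch below applies that rule of thumb to an 8-billion-parameter model. It deliberately ignores the KV cache, runtime overhead, and the extra scaling data real quantization formats store, so treat the results as lower bounds.

```java
/** Back-of-the-envelope estimate of the memory needed just to hold model weights. */
final class WeightMemory {

    /** Bytes = parameters × bits per weight ÷ 8, returned in gigabytes (10^9 bytes). */
    static double weightGigabytes(long parameters, double bitsPerWeight) {
        return parameters * bitsPerWeight / 8.0 / 1e9;
    }

    public static void main(String[] args) {
        long params = 8_000_000_000L; // roughly an "8B" model
        for (double bits : new double[] {16, 8, 4}) {
            System.out.printf("%4.0f-bit weights: ~%.1f GB%n", bits, weightGigabytes(params, bits));
        }
    }
}
```

The arithmetic gives about 16 GB at 16-bit precision, 8 GB at 8-bit, and 4 GB at 4-bit, which is why quantized builds make 8B models practical on machines with modest memory.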

3. Prompt Engineering and Tuning

Building Effective Prompts

- Master the principles of prompt engineering for clear, actionable queries.

- Learn to structure prompts by separating instructions, context, and questions.
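One way to keep instructions, context, and question apart is to assemble every prompt from named sections with explicit delimiters. The record below is a hypothetical helper (StructuredPrompt is not a library class), and the "###" headings are just one common convention; any consistent, clearly marked separators serve the same purpose.

```java
/** Assembles a prompt with clearly separated instruction, context, and question sections. */
record StructuredPrompt(String instructions, String context, String question) {

    StructuredPrompt {
        if (instructions.isBlank() || question.isBlank()) {
            throw new IllegalArgumentException("instructions and question are required");
        }
    }

    /** Renders the sections with explicit delimiters; the context section is optional. */
    String render() {
        StringBuilder sb = new StringBuilder();
        sb.append("### Instructions\n").append(instructions.strip()).append("\n\n");
        if (context != null && !context.isBlank()) {
            sb.append("### Context\n").append(context.strip()).append("\n\n");
        }
        sb.append("### Question\n").append(question.strip()).append('\n');
        return sb.toString();
    }
}
```

Keeping the parts separate in code also makes it easy to vary one section (for example, the instructions) while holding the others fixed during testing.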

Iterative Improvement

- Test and refine prompts based on model responses.

- Develop a library of prompts tailored to various tasks and evaluate their effectiveness.
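To evaluate a prompt library systematically, run each template against a fixed set of test inputs and score the answers. The sketch below uses a deliberately crude keyword check and models the LLM as a plain String -> String function, so it can be wired to any client; PromptEvaluator, Case, and the {input} placeholder are illustrative names, not part of Spring AI or Ollama.

```java
import java.util.List;
import java.util.Locale;
import java.util.function.UnaryOperator;

/** Scores prompt templates against a small test set using simple keyword checks. */
final class PromptEvaluator {

    /** One test input and the keywords a good answer is expected to mention. */
    record Case(String input, List<String> expectedKeywords) {}

    /**
     * Returns the fraction of cases whose answer contains every expected keyword.
     * The template uses "{input}" as a placeholder; the model is any String -> String function.
     */
    static double passRate(String template, List<Case> cases, UnaryOperator<String> model) {
        if (cases.isEmpty()) return 0.0;
        int passed = 0;
        for (Case c : cases) {
            String prompt = template.replace("{input}", c.input());
            String answer = model.apply(prompt).toLowerCase(Locale.ROOT);
            boolean ok = c.expectedKeywords().stream()
                    .allMatch(k -> answer.contains(k.toLowerCase(Locale.ROOT)));
            if (ok) passed++;
        }
        return (double) passed / cases.size();
    }
}
```

In unit tests a stub function can stand in for the model; in practice you would pass a lambda that calls your local model and compare pass rates between template variants.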

4. Building RESTful Chatbots with Spring AI

Spring AI Integration

- Set up a Spring Boot project and add the Spring AI dependencies.

- Configure Spring AI to connect to local models through Ollama’s REST API (served at http://localhost:11434 by default).
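Spring AI's Ollama integration is built on Ollama's REST API, so it helps to know what a request looks like on the wire. The dependency-free sketch below builds a non-streaming call to the /api/chat endpoint on Ollama's default port using only java.net.http. The model tag qwen3:8b is an example; use whichever tag ollama list shows on your machine. The hand-rolled JSON escaping only keeps the example self-contained; a real project would use a JSON library or let Spring AI handle serialization.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

/** Minimal, dependency-free call to a local Ollama server's chat endpoint. */
final class OllamaChat {

    static final URI CHAT_URI = URI.create("http://localhost:11434/api/chat"); // Ollama's default port

    /** Builds a non-streaming /api/chat request body with a single user message. */
    static String chatBody(String model, String userMessage) {
        return "{\"model\":\"" + escape(model) + "\","
                + "\"messages\":[{\"role\":\"user\",\"content\":\"" + escape(userMessage) + "\"}],"
                + "\"stream\":false}";
    }

    static HttpRequest request(String model, String userMessage) {
        return HttpRequest.newBuilder(CHAT_URI)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(chatBody(model, userMessage)))
                .build();
    }

    /** Escapes the characters a JSON string cannot contain verbatim. */
    static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char ch : s.toCharArray()) {
            switch (ch) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                case '\r' -> sb.append("\\r");
                case '\t' -> sb.append("\\t");
                default -> {
                    if (ch < 0x20) sb.append(String.format("\\u%04x", (int) ch));
                    else sb.append(ch);
                }
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) throws Exception {
        // Requires a running Ollama server with the model already pulled.
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request("qwen3:8b", "Say hello in one word."), HttpResponse.BodyHandlers.ofString());
        System.out.println(response.body()); // raw JSON; the reply text is under message.content
    }
}
```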

API Development

- Implement REST controllers for chatbot interactions.

- Design a service layer to handle LLM communication.

- Test endpoints using tools like Postman or a simple frontend.
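In Spring Boot, the controller would be a @RestController that delegates to a @Service wrapping Spring AI's chat client. To show the same controller/service split without any framework, the sketch below uses the JDK's built-in com.sun.net.httpserver package. The /chat path, the plain-text request and response bodies, and the ChatService and ChatEndpoint names are assumptions made for illustration.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;

/** Service layer: the only component that talks to the LLM (stubbed in this sketch). */
interface ChatService {
    String reply(String userMessage);
}

/** Controller layer: translates HTTP into service calls and contains no model logic. */
final class ChatEndpoint {

    record Reply(int status, String body) {}

    /** Pure request handling, kept separate from the HTTP server so it is easy to unit-test. */
    static Reply handle(String method, String requestBody, ChatService service) {
        if (!"POST".equals(method)) return new Reply(405, "method not allowed");
        if (requestBody.isBlank()) return new Reply(400, "empty message");
        return new Reply(200, service.reply(requestBody.strip()));
    }

    /** Starts the JDK's built-in HTTP server exposing POST /chat on 127.0.0.1. */
    static HttpServer start(int port, ChatService service) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", port), 0);
        server.createContext("/chat", exchange -> {
            try {
                String body = new String(exchange.getRequestBody().readAllBytes(), StandardCharsets.UTF_8);
                Reply reply = handle(exchange.getRequestMethod(), body, service);
                byte[] bytes = reply.body().getBytes(StandardCharsets.UTF_8);
                exchange.getResponseHeaders().set("Content-Type", "text/plain; charset=utf-8");
                exchange.sendResponseHeaders(reply.status(), bytes.length == 0 ? -1 : bytes.length);
                try (OutputStream out = exchange.getResponseBody()) {
                    out.write(bytes);
                }
            } finally {
                exchange.close();
            }
        });
        server.start();
        return server;
    }
}
```

Calling ChatEndpoint.start(8080, service) exposes POST /chat, which you can exercise from Postman or curl. Keeping handle free of HTTP types means the controller logic can be tested without starting a server.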

5. Implementing Chat Memory

Conversation History Management

- Design data structures and set up a PostgreSQL database for storing chat histories.

- Integrate message history into LLM queries to maintain conversational context.

- Develop strategies for the context-window limit, such as keeping only the most recent messages or summarizing older turns.
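A common strategy for the context-window limit is a sliding window: keep only the most recent messages that fit a token budget. The sketch below assumes roughly four characters per token, a rough heuristic for English text rather than a real tokenizer, and keeps history in memory; persisting messages to PostgreSQL and summarizing dropped turns are left out. Spring AI provides its own chat-memory abstractions, which this standalone sketch does not use.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Keeps the most recent messages that fit within an approximate token budget. */
final class ChatMemory {

    record Message(String role, String content) {}

    private final Deque<Message> history = new ArrayDeque<>();
    private final int maxTokens;

    ChatMemory(int maxTokens) {
        this.maxTokens = maxTokens;
    }

    /** Crude heuristic (assumption): about four characters per token. */
    static int estimateTokens(Message m) {
        return Math.max(1, m.content().length() / 4);
    }

    void add(Message m) {
        history.addLast(m);
    }

    /** Returns the newest messages, in chronological order, whose estimated size fits the budget. */
    List<Message> window() {
        List<Message> selected = new ArrayList<>();
        int used = 0;
        var it = history.descendingIterator(); // walk from newest to oldest
        while (it.hasNext()) {
            Message m = it.next();
            int cost = estimateTokens(m);
            if (used + cost > maxTokens) break;
            selected.add(0, m); // prepend to restore chronological order
            used += cost;
        }
        return selected;
    }
}
```

The messages returned by window() are what you would prepend to each new request so the model sees the recent conversation.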

6. Enabling Retrieval-Augmented Generation (RAG)

Vector Database Integration

- Install PostgreSQL with the PGVector extension for vector storage.

- Create tables and indexes for embedding storage and similarity search.

Embedding and Retrieval

- Use Spring AI’s EmbeddingModel (named EmbeddingClient in early releases) to generate document embeddings.

- Implement logic to retrieve relevant documents based on vector similarity and inject them into model prompts.

- Speed up retrieval with approximate nearest-neighbor indexes such as HNSW, which PGVector supports alongside IVFFlat.
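Retrieval boils down to ranking stored embeddings by similarity to the query embedding and pasting the best matches into the prompt. PGVector does this inside PostgreSQL (its <=> operator computes cosine distance), and an HNSW index replaces the exhaustive scan with an approximate one. The in-memory sketch below performs the exact, brute-force version to show what is being computed; real embeddings come from an embedding model and have hundreds or thousands of dimensions. TinyRetriever and the prompt wording are illustrative.

```java
import java.util.Comparator;
import java.util.List;

/** Brute-force similarity search over in-memory embeddings, followed by prompt augmentation. */
final class TinyRetriever {

    record Doc(String text, float[] embedding) {}

    /** Cosine similarity: dot product divided by the product of the vector lengths. */
    static double cosine(float[] a, float[] b) {
        if (a.length != b.length) throw new IllegalArgumentException("dimension mismatch");
        double dot = 0, na = 0, nb = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            na += a[i] * a[i];
            nb += b[i] * b[i];
        }
        return (na == 0 || nb == 0) ? 0 : dot / (Math.sqrt(na) * Math.sqrt(nb));
    }

    /** Returns the k documents most similar to the query embedding (exact search). */
    static List<Doc> topK(List<Doc> docs, float[] query, int k) {
        return docs.stream()
                .sorted(Comparator.comparingDouble((Doc d) -> cosine(d.embedding(), query)).reversed())
                .limit(k)
                .toList();
    }

    /** Injects the retrieved passages into the prompt as context. */
    static String augment(String question, List<Doc> hits) {
        StringBuilder sb = new StringBuilder("Answer using only the context below.\n\nContext:\n");
        for (Doc d : hits) sb.append("- ").append(d.text()).append('\n');
        return sb.append("\nQuestion: ").append(question).toString();
    }
}
```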

7. Expanding with Tools and Agents

Function Calling and Tool Integration

- Enable LLMs to invoke Java functions through tool (function) calling: the model emits a structured request and your application runs the function.

- Design agents capable of selecting and using tools based on user intent.
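With tool calling, the model never runs code itself: it returns a structured request naming a tool and its arguments, the application executes the matching Java function, and the result goes back to the model. Spring AI can automate this registration and round trip. The framework-free sketch below assumes the model's request has already been parsed into a ToolCall record; ToolRegistry and ToolCall are hypothetical names.

```java
import java.util.Map;
import java.util.function.Function;

/** Registry mapping tool names (as advertised to the model) to plain Java functions. */
final class ToolRegistry {

    /** A tool invocation requested by the model, already parsed from its structured output. */
    record ToolCall(String name, Map<String, String> arguments) {}

    private final Map<String, Function<Map<String, String>, String>> tools;

    ToolRegistry(Map<String, Function<Map<String, String>, String>> tools) {
        this.tools = Map.copyOf(tools);
    }

    /** Runs the requested tool; unknown names are reported back to the model instead of throwing. */
    String invoke(ToolCall call) {
        Function<Map<String, String>, String> tool = tools.get(call.name());
        if (tool == null) return "error: unknown tool '" + call.name() + "'";
        return tool.apply(call.arguments());
    }
}
```

Returning an error string rather than throwing lets the model see its mistake and choose a valid tool on the next turn.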

Agent Architecture

- Develop decision-making mechanisms and test agents on real tasks.

- Optimize agent workflows for accuracy and efficiency.
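An agent wraps tool calls in a decision loop: the model looks at the transcript so far, either picks a tool or declares a final answer, and the loop records each observation. In the sketch below the planner function stands in for an LLM call, and the step limit is a simple safeguard against agents that never finish. AgentLoop, Decision, UseTool, and Finish are illustrative names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

/** A bounded observe-decide-act loop; the planner stands in for an LLM call. */
final class AgentLoop {

    /** What the planner decided: call a tool, or finish with an answer. */
    sealed interface Decision permits UseTool, Finish {}
    record UseTool(String tool, String input) implements Decision {}
    record Finish(String answer) implements Decision {}

    static String run(String task,
                      Function<List<String>, Decision> planner,
                      Map<String, Function<String, String>> tools,
                      int maxSteps) {
        List<String> transcript = new ArrayList<>(List.of("task: " + task));
        for (int step = 0; step < maxSteps; step++) {
            Decision d = planner.apply(List.copyOf(transcript));
            if (d instanceof Finish f) return f.answer();
            UseTool u = (UseTool) d;
            Function<String, String> tool = tools.get(u.tool());
            String observation = (tool == null) ? "unknown tool " + u.tool() : tool.apply(u.input());
            transcript.add(u.tool() + "(" + u.input() + ") -> " + observation);
        }
        return "stopped: step limit reached"; // guard against endless loops
    }
}
```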

8. Orchestrating Multi-Agent and Multi-Chain Processing

Complex Workflow Design

- Build architectures for sequential and parallel processing chains.

- Implement protocols for agent-to-agent communication and coordination.
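Sequential chains feed each step's output into the next, while parallel chains fan the same input out to several agents and merge the results. The sketch below models each step or agent as a String -> String function (a stand-in for an LLM call) and uses CompletableFuture for the parallel branch. It relies on the common fork-join pool, which is an assumption worth revisiting for blocking network calls, where a dedicated executor is usually the better choice.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.function.Function;
import java.util.function.UnaryOperator;

/** Composes LLM steps (stubbed as String -> String functions) sequentially or in parallel. */
final class Chains {

    /** Sequential chain: each step consumes the previous step's output. */
    @SafeVarargs
    static String sequential(String input, UnaryOperator<String>... steps) {
        String current = input;
        for (UnaryOperator<String> step : steps) current = step.apply(current);
        return current;
    }

    /** Parallel fan-out: every agent gets the same input; a merge step combines the results. */
    static String parallel(String input, List<UnaryOperator<String>> agents,
                           Function<List<String>, String> merge) {
        List<CompletableFuture<String>> futures = agents.stream()
                .map(a -> CompletableFuture.supplyAsync(() -> a.apply(input)))
                .toList();
        // All futures are already running; join waits for each one, preserving agent order.
        return merge.apply(futures.stream().map(CompletableFuture::join).toList());
    }
}
```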

Scenario Testing

- Simulate multi-agent collaboration on complex tasks and refine system performance.

9. Connecting External Services and Scaling

API and Cloud Integration

- Connect to external APIs (OpenAI, cloud LLMs) and design abstractions for model switching.

- Expand capabilities to support multimodal inputs (images, audio) and additional external services.
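Designing for model switching usually means coding against a small provider-neutral interface and choosing the backend in one place. The sketch below uses a hypothetical LlmBackend interface (not Spring AI's own ChatModel abstraction, which serves a similar purpose) and a router that can swap between, say, a local Ollama model and a cloud API at runtime.

```java
import java.util.Map;

/** Provider-neutral interface so callers never depend on a specific vendor SDK. */
interface LlmBackend {
    String complete(String prompt);
}

/** Routes requests to a named backend, e.g. a local model or a cloud API. */
final class ModelRouter implements LlmBackend {
    private final Map<String, LlmBackend> backends;
    private volatile String active;

    ModelRouter(Map<String, LlmBackend> backends, String initial) {
        if (!backends.containsKey(initial)) throw new IllegalArgumentException("unknown backend " + initial);
        this.backends = Map.copyOf(backends);
        this.active = initial;
    }

    /** Switches models at runtime without touching calling code. */
    void use(String name) {
        if (!backends.containsKey(name)) throw new IllegalArgumentException("unknown backend " + name);
        active = name;
    }

    @Override
    public String complete(String prompt) {
        return backends.get(active).complete(prompt);
    }
}
```

Because ModelRouter itself implements LlmBackend, callers cannot tell whether they talk to one model or a router, which keeps the switch a configuration decision.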

Performance and Scalability

- Implement caching and load balancing strategies.

- Architect the system for horizontal scaling and high availability.
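Two inexpensive scalability measures are caching answers to repeated prompts and spreading the remaining calls over several model replicas. The sketch below combines an LRU cache built on LinkedHashMap's access-order mode with round-robin replica selection, modeling each replica as a String -> String function. Caching whole answers only makes sense when identical prompts should receive identical answers (for example, at temperature 0 or for FAQ-style queries), and treating the exact prompt string as the cache key is a simplifying assumption.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.UnaryOperator;

/** Caches identical prompts (LRU) and spreads cache misses across replicas round-robin. */
final class CachedBalancedClient {
    private final List<UnaryOperator<String>> replicas;
    private final AtomicInteger next = new AtomicInteger();
    private final Map<String, String> cache;

    CachedBalancedClient(List<UnaryOperator<String>> replicas, int maxEntries) {
        this.replicas = List.copyOf(replicas);
        // accessOrder = true keeps the least recently used entry first, so it is evicted first.
        this.cache = new LinkedHashMap<String, String>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<String, String> eldest) {
                return size() > maxEntries;
            }
        };
    }

    String complete(String prompt) {
        synchronized (cache) {
            String hit = cache.get(prompt);
            if (hit != null) return hit;
        }
        int i = Math.floorMod(next.getAndIncrement(), replicas.size());
        String answer = replicas.get(i).apply(prompt); // model call happens outside the lock
        synchronized (cache) {
            cache.put(prompt, answer);
        }
        return answer;
    }
}
```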

10. Fine-Tuning LLMs for Custom Needs

Data Preparation and Training

- Collect and preprocess datasets for domain-specific fine-tuning.

- Split data into training and validation sets.
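Splitting the data reproducibly keeps validation scores comparable across fine-tuning runs. The sketch below shuffles with a fixed seed and holds out a fraction of the examples for validation; the fraction is a parameter (values such as 0.1 or 0.2 are typical starting points), and DatasetSplit is an illustrative name.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

/** Shuffles examples with a fixed seed and splits them into training and validation sets. */
final class DatasetSplit {

    record Split<T>(List<T> train, List<T> validation) {}

    static <T> Split<T> split(List<T> examples, double validationFraction, long seed) {
        if (validationFraction <= 0 || validationFraction >= 1) {
            throw new IllegalArgumentException("validationFraction must be in (0, 1)");
        }
        List<T> shuffled = new ArrayList<>(examples);
        Collections.shuffle(shuffled, new Random(seed)); // fixed seed => reproducible split
        int validationSize = (int) Math.round(shuffled.size() * validationFraction);
        return new Split<>(
                List.copyOf(shuffled.subList(validationSize, shuffled.size())),
                List.copyOf(shuffled.subList(0, validationSize)));
    }
}
```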

Parameter-Efficient Fine-Tuning

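- Apply LoRA (training small low-rank adapter matrices while the base weights stay frozen) and QLoRA (LoRA on top of a 4-bit quantized base model) to fine-tune models with far less memory than full fine-tuning.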

- Tune hyperparameters and evaluate model performance iteratively.
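LoRA freezes a pretrained weight matrix W (d × k) and trains two small matrices, B (d × r) and A (r × k), so the layer computes y = W·x + (alpha / r)·B·A·x. Only r·(d + k) parameters are trained instead of d·k: for a 4096 × 4096 layer with rank r = 8 that is 65,536 instead of 16,777,216, about 0.4%. Following the original LoRA recipe, A starts with small random values and B with zeros, so the adapted layer initially behaves exactly like the pretrained one. The toy class below covers only the forward pass and the parameter counts; training A and B is left to frameworks such as Hugging Face PEFT, and LoraLinear is an illustrative name.

```java
import java.util.Random;

/** Toy LoRA linear layer: y = W·x + (alpha / r)·B·(A·x), with W frozen. */
final class LoraLinear {
    final double[][] w;  // frozen pretrained weights, d x k
    final double[][] a;  // trainable, r x k, small random init
    final double[][] b;  // trainable, d x r, zero init so the layer starts out equal to W
    final double scale;  // alpha / r

    LoraLinear(double[][] w, int r, double alpha, Random rng) {
        int d = w.length, k = w[0].length;
        this.w = w;
        this.a = new double[r][k];
        this.b = new double[d][r];
        for (double[] row : a)
            for (int j = 0; j < k; j++) row[j] = 0.01 * rng.nextGaussian();
        this.scale = alpha / r;
    }

    static double[] matVec(double[][] m, double[] x) {
        double[] y = new double[m.length];
        for (int i = 0; i < m.length; i++)
            for (int j = 0; j < x.length; j++) y[i] += m[i][j] * x[j];
        return y;
    }

    double[] forward(double[] x) {
        double[] out = matVec(w, x);
        double[] delta = matVec(b, matVec(a, x)); // passes through an r-dimensional bottleneck
        for (int i = 0; i < out.length; i++) out[i] += scale * delta[i];
        return out;
    }

    static long fullParams(long d, long k) { return d * k; }
    static long loraParams(long d, long k, long r) { return r * (d + k); }
}
```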

Learning Resources and Practical Projects

Recommended Resources

- Hugging Face courses on LLMs and agents

- Spring AI and Ollama documentation

- Tutorials on RAG, PGVector, and vector databases

Hands-On Projects

- Local chatbot with prompt understanding

- REST API for LLM interaction

- Chatbot with persistent memory

- RAG-based Q&A system

- Tool-using agents

- Multi-agent orchestration

- Fine-tuned, domain-specific LLMs

- Full-featured assistants integrating multiple data sources

Conclusion

This roadmap provides a clear, actionable path for software developers to master LLM technologies using state-of-the-art frameworks. By progressing through these stages and consolidating knowledge with practical projects, developers can build robust, scalable, and intelligent AI applications tailored to real-world needs.

Useful materials

Authors:

- Sinan Ozdemir - AI and LLM Expert, Author, Educator

- Paulo Dichone - Software Engineer, AWS Cloud Practitioner & Instructor

- Rob Barton & Jerome Henry - Experts in AI & ML Tools for Deep Learning, LLMs, and More

- Ken Kousen - Integrating AI in Java Projects

- Arnold Oberleiter - A passionate lecturer in the field of Artificial Intelligence.

Published on 12/20/2024