Articles

15-Jun-2025

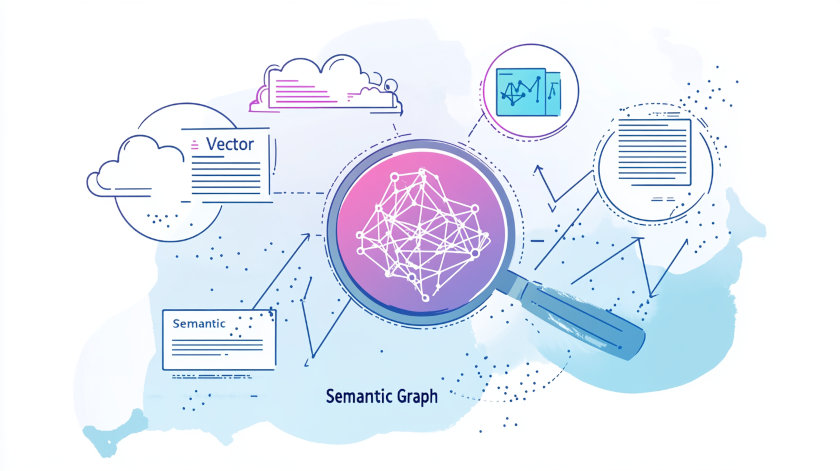

Unified AI Data Architecture for Legal Research: How PostgreSQL, PgVector, and Apache AGE Power Next-Gen Copilots

A detailed breakdown of the architecture behind the GraphRAG Legal Cases project using Azure Database for PostgreSQL, Apache AGE, and advanced AI/ML services. Learn how vector search, semantic ranking, and graph-based retrieval are combined to deliver high-quality legal research solutions at scale.

29-May-2025

Test results for LLMs running locally using Ollama on AMD Ryzen 7 8745HS

Local LLM benchmark data on the integrated GPU of an AMD Ryzen 7 8745HS with 16/64 GB RAM

27-May-2025

How to Run LLMs Locally: A Complete Step-by-Step Guide

Unlock the power of AI on your own hardware with this comprehensive guide to running large language models (LLMs) locally. Learn about privacy, hardware requirements, quantization, and practical tools like LM Studio and Ollama for private, cost-effective, and customizable AI.

26-May-2025

Hardware Options for Running Local LLMs 2025

Explore the optimal hardware for running large language models (LLMs) locally, from entry-level edge devices like the NVIDIA Jetson Orin Nano Super to powerful GPUs, the Apple M3 Ultra Mac Studio, and modern CPUs like the AMD Ryzen 7 8745HS. Learn how to choose the right setup for efficient AI performance, balancing cost, speed, and scalability.

25-May-2025

How to Benchmark Local LLMs

A practical guide to benchmarking local LLMs with Ollama, covering script automation, hardware detection, and performance metrics.

24-May-2025

Test results for LLMs running locally using Ollama on NVIDIA RTX 4060 Ti

Local LLM benchmark data, sorted by evaluation performance

20-Dec-2024

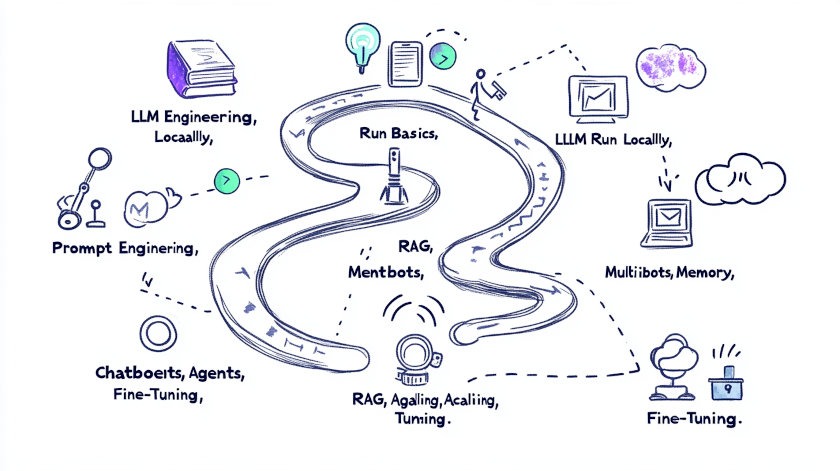

LLM Learning Roadmap for Software Developers

Master LLMs with a step-by-step roadmap covering Spring AI, Ollama, RAG, prompt engineering, agents, and fine-tuning for building scalable AI apps.